- The Basic Principles of Operant Conditioning

- Operant Conditioning in the Skinner Box

- +R and the Skinner Box

- -R and the Skinner Box

- +P and the Skinner Box

- -P and the Skinner Box

- The Computer Is Always Effective & Efficient

- The Computer Is Always Aversive

- Scan & Capture

- Shaping

- Secondary Reinforcers

- Instinctive Drift

Operant Conditioning & Modern Science

Burrhus Frederic Skinner (1904 – 1990), a professor of psychology at Harvard University, created the term “operant conditioning” in the 1930s.

Now Skinner did not invent operant conditioning any more than Newton invented gravity, because operant conditioning is about learning from consequences:

Animals will keep doing things that produce desired consequences, and they stop doing things that generate undesired consequences.

Because of this, animal trainers have been around for centuries, and they have always been effective in teaching animals from different species:

- Around 2000 BC, people used falcons to hunt wild animals.

- In the 4th century BC, Alexander the Great conquered much of the known world with his famous horse Bucephalus.

- In 218 BC, Hannibal led an army with war elephants across the Alps.

- In the 15th century, pigs were used as truffle hunters.

- In the 16th century, Antoine de Pluvinel schooled war horses.

- In 1788, the first training facility was established to teach dogs to help the blind.

- In the 19th century, the first school for police dogs was founded.

Although the principles of operant conditioning have existed since the dawn of time, Skinner did coin the term “operant conditioning”.

And he did codify the basic principles.

The Basic Principles of Operant Conditioning

Operant conditioning is learning from consequences.

An animal learns, “when I do this, it has consequence XYZ”, where XYZ, for example, is delivery of food, or the arrival of electric current, or the removal of electric current, or the addition or removal of other desired or undesired stimuli. Behavior that is followed by pleasant consequences is likely to be repeated, and behavior followed by unpleasant consequences is less likely to be repeated.

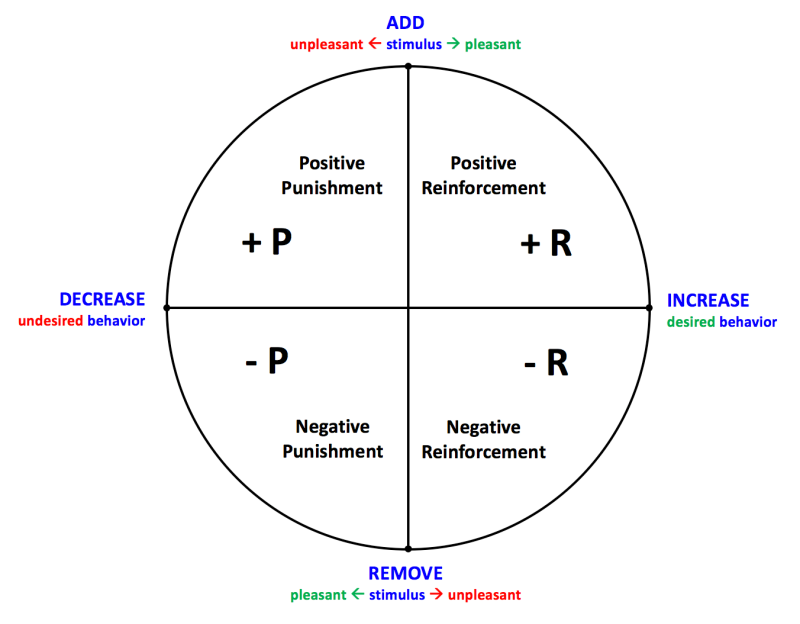

There are four types of consequences in operant conditioning.

These types are placed into two categories: reinforcement and punishment.

- Reinforcement is a desired consequence – something pleasant, non-aversive happens. Reinforcement is used to increase and create behavior. Behavior which is reinforced tends to be repeated (strengthened).

- Punishment is an undesired consequence – something unpleasant, aversive happens. Punishment is used to decrease and stop behavior. Behavior which is punished tends to be extinguished (weakened).

So reinforcement and punishment can be used as strategies to motivate an animal to do or not do certain behavior.

In these categories, a stimulus can be added (positive) or removed (negative).

Reinforcers are stimuli used to increase desired behavior:

- The addition of a pleasant stimulus is called positive reinforcement (+R)

- The removal of an unpleasant (aversive) stimulus is called negative reinforcement (-R)

Punishers are stimuli used to decrease undesired behavior:

- The addition of an unpleasant (aversive) stimulus is called positive punishment (+P)

- The removal of a pleasant (desired) stimulus is called negative punishment (-P)
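The four quadrants above can be written down as a tiny lookup. This is only a sketch to make the naming rule explicit. The function name `classify_consequence` and its two string arguments are my own assumptions for illustration, not something from Skinner's work.

```python
def classify_consequence(stimulus_change, behavior_effect):
    """Name the quadrant of operant conditioning.

    stimulus_change: "added" or "removed" (hypothetical labels)
    behavior_effect: "increase" or "decrease" (hypothetical labels)
    """
    # Positive/negative only says whether a stimulus is added or removed.
    sign = {"added": "+", "removed": "-"}[stimulus_change]
    # Reinforcement increases behavior, punishment decreases it.
    kind = {"increase": "R", "decrease": "P"}[behavior_effect]
    return sign + kind


print(classify_consequence("added", "increase"))    # food after a peck
print(classify_consequence("removed", "increase"))  # current switched off
```

Note that the function never asks whether the stimulus is "nice" or "nasty": the sign comes only from adding or removing, and the letter only from what happens to the behavior.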

~

Note that the terms positive and negative are about adding and removing, so they have nothing to do with being kind or not kind.

And the term aversive is about causing avoidance, and punishment is about decreasing behavior so that it is less likely to occur in the future. It says nothing about being scary, painful, or aggressive.

Operant Conditioning in the Skinner Box

B.F. Skinner invented mechanical-based operant conditioning to produce behaviors with rats and pigeons:

- To put natural behavior under stimulus control. An example of stimulus control is that we, as humans, stop for a red traffic light. So the red light (the stimulus) has us under control.

- To condition behavior that was not necessarily natural to the animal. For example, he could teach a pigeon to make a full turn when food was used as a reward.

Skinner developed the model of operant conditioning by doing thousands of experiments with rats and pigeons.

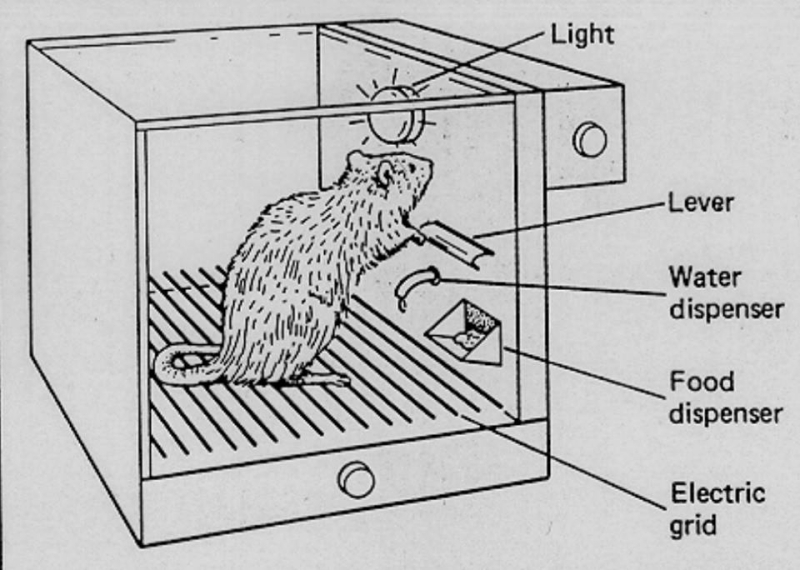

To study operant conditioning, Skinner invented the “operant conditioning chamber”, also known as the “Skinner Box”, which was a small mechanical device.

Inside the Skinner Box, operant conditioning was computer-controlled so that animal behavior could be produced on purpose.

The experiments in the Skinner Boxes were done to show how the behavior of a rat or pigeon changes when it is motivated in one of four ways:

- Positive punishment: +P

- Negative punishment: -P

- Positive reinforcement: +R

- Negative reinforcement: -R

~

Note that I am aware that this article can easily start a discussion about what is morally and ethically “right” and what is “wrong”, and whether or not Skinner boxes are cruel, but let’s not go there.

The reason I post the videos here is that there are a lot of Skinner-based training methods nowadays, for example, clicker training.

Also, people who embrace the “+R only” or “all-positive” perspective use the “positive” bias (in the meaning of “nice”) of the Skinnerian ideology.

And the mainstream behaviorists of today have followed the Skinnerian path, so it’s good to know more about their source:

The Skinner Box set-up

Skinner’s scientific research was a neutral, rational, systematic, and planned process to increase knowledge about animal behavior.

Rats and pigeons were used in experiments to collect data.

To be able to make scientific conclusions, it was important that all experiments and research were repeatable.

That’s why the whole setting of the operant conditioning experiments in the Skinner Box was standardized and computer-controlled to avoid uncertainty:

- All Skinner Boxes had a predefined standard and pristine set up (size of the box, temperature, lighting, discs, levers, food and water dispensers, electric grid, no interaction with other animals etc.).

- The experiments were designed by a human, but the “learning from consequence” process was initially a computer-controlled and mechanical process:

  - Reinforcement and punishment were done by a computer.

  - The stimulus used in +R and –P was always pleasant.

  - The stimulus used in +P and –R was always aversive.

  - Stimuli were always well-timed and well-dosed.

+R and the Skinner Box

+R is the abbreviation for positive reinforcement and is about getting desired behavior through the addition of a pleasant stimulus.

The Skinner Box was used to teach, for example, a pigeon how to peck a colored disc. The pigeon learned that pecking the disc would produce the reward.

To sum up the key elements of +R in this experiment:

- Desired behavior: peck a disc

- Addition of pleasant stimulus: food

To test +R, the pigeons were kept at ¾ of their normal weight, so they were always hungry when the pleasant stimulus was offered.

At first, the pigeon would peck the disc accidentally, but it didn’t take long to learn that the disc represented a means and a resource to obtain the food.

The consequence of receiving the pleasant stimulus for disc pecking ensured that the pigeon would repeat the desired behavior.

Skinner initially used a continuous reinforcement schedule, which means that reinforcement (food) was delivered every time the pigeon pecked the disc.

Then Skinner replaced the continuous schedule with an intermittent schedule of reinforcement. In this case it was a variable-ratio schedule, which means food is delivered after an unpredictable number of pecks rather than after every peck. This increased the pigeon’s drive to put more effort into it, just as it drives people to gamble.
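To make the difference between the two schedules concrete, here is a minimal sketch. The function names, the `mean_ratio` parameter, and the way the unpredictable number of pecks is drawn are my own assumptions for illustration. They are not taken from Skinner's apparatus.

```python
import random


def continuous_schedule(pecks):
    """Continuous reinforcement: every peck is followed by food."""
    return [True] * pecks


def variable_ratio_schedule(pecks, mean_ratio, rng):
    """Variable-ratio reinforcement: food arrives after an unpredictable
    number of pecks that averages `mean_ratio` (assumed: drawn uniformly
    from 1 to 2 * mean_ratio - 1)."""
    rewards = []
    needed = rng.randint(1, 2 * mean_ratio - 1)  # pecks until the next food
    for _ in range(pecks):
        needed -= 1
        if needed == 0:
            rewards.append(True)   # this peck pays off
            needed = rng.randint(1, 2 * mean_ratio - 1)
        else:
            rewards.append(False)  # this peck produces nothing
    return rewards


rng = random.Random(0)  # fixed seed so the run is repeatable
print(sum(continuous_schedule(20)), "rewards for 20 pecks (continuous)")
print(sum(variable_ratio_schedule(20, 5, rng)), "rewards for 20 pecks (variable ratio)")
```

On the variable-ratio schedule the pigeon cannot tell which peck will pay off, only that pecking more often brings the next reward sooner.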

Check out this video for more details:

-R and the Skinner Box

-R is the abbreviation for negative reinforcement and is about getting desired behavior through the removal of an unpleasant stimulus.

The Skinner Box was used to teach, for example, rats how to push a lever. The rat learned that by pressing the lever, the electric current switched off, so the aversive stimulus was removed.

To sum up the key elements of –R in this experiment:

- Desired behavior: push a lever

- Removal of an unpleasant stimulus: electric current

Initially, the rat would press the lever accidentally, and this way, he found out that pressing the lever would switch off the electric current. The consequence of escaping the aversive stimulus ensured that the rat would repeat the action of lever pressing.

Subsequently, in the process of -R, a light would be switched on just before the electric current. This way, the light became a warning signal, and the rat would press the lever as soon as the light switched on. In other words, when the light flashed (the conditioned aversive stimulus), the rat could avoid the shock (the primary aversive stimulus) by performing the desired behavior: pressing the lever.

+P and the Skinner Box

+P is the abbreviation for positive punishment and is about stopping undesired behavior through the addition of an unpleasant stimulus.

For example, a rat in a Skinner Box that should learn to avoid the lever was given positive punishment by having an electric current turned on if the rat pressed the lever.

To sum up the key elements of +P in this experiment:

- Undesired behavior: touching the lever.

- Addition of an unpleasant stimulus: electric current.

-P and the Skinner Box

-P is the abbreviation for negative punishment and is about stopping undesired behavior through the removal of a pleasant stimulus.

For example, a rat in a Skinner box that had its heat turned off when it pressed the lever would be receiving negative punishment, and should learn to avoid the lever.

To sum up the key elements of –P in this experiment:

- Undesired behavior: pushing a lever.

- Removal of a pleasant stimulus: turning off the heat.

The Computer Is Always Effective & Efficient

Although it might sound weird, some of the best animal trainers and some of the best operant conditioners in the world are machines.

That’s because the computer is always effective in creating and stopping behavior in a controlled Skinner Box.

Seen scientifically, a machine always does the right things:

- The computer is always effective in creating desired behavior with +R or -R.

- The computer is always effective in stopping undesired behavior with +P or -P.

Besides that, computers are also efficient.

Seen scientifically, a computer always does things right:

- Computers are great at operant conditioning because they have infinite patience.

- And they have perfect timing: they give a clear reward, release or other consequence at the right moment.

With efficient, well-timed and well-dosed stimuli, each animal will learn that “what I do” has a consequence.

The animal learns through operant conditioning and starts to perform desired behavior or stop undesired behavior.

Now a Skinner computer is always efficient and effective, but it’s not computers who train horses.

Instead, it’s humans.

And they are not always doing things right!

And they are not always doing the right things!

More about this in the article:

- Operant Conditioning & Mother Nature » (COMING SOON)

The Computer Is Always Aversive

In Skinner’s +P and –R experiments, the computer and its stimuli were always aversive.

In the Skinner Boxes, the aversive stimuli, such as the electric current, were always perceived by the animal as unpleasant, undesired, painful or scary.

So the intention with the electric current was to be aversive, and the animal’s perception of it was that it was aversive.

That’s why nowadays a lot of people make the assumption that:

- Positive punishment is ALWAYS aversive.

- Negative reinforcement is ALWAYS aversive.

However, when people train horses, neither positive punishment (+P) nor negative reinforcement (-R) has to be aversive or unpleasant.

- To stop undesired behavior, riders can use +P through a non-aversive vocal stimulus such as “Nope, try again”.

- To get desired behavior, riders can use -R through a friendly touch and the removal of this gentle touch.

So in a human-horse situation:

- Positive punishment and negative reinforcement are NOT always about doing something unpleasant.

- Humans can use +P to stop undesired behavior, or use -R to get desired behavior by adding or removing a stimulus which is NOT aversive.

And to make it even more interesting:

- Some riders intend to be aversive, but it’s not perceived by the horse as aversive.

- Some riders use a pat on the head intended as reward (+R), but their horse perceives this stimulus as aversive! So a human can be aversive at the “wrong” moment!

So we need to be aware that:

- The computer in a Skinner Box is always aversive.

- The human can be unintentionally and unconsciously aversive when using +R.

- The human’s stimuli are not always intended or perceived as aversive when using –R or +P.

Scan & Capture

The foundation of science-based operant conditioning is the “scan & capture” technique.

B.F. Skinner invented mechanically based operant conditioning, and he taught the basics to his two young assistants, Keller and Marian Breland.

The Brelands helped Skinner to train thousands of rats and pigeons used in various experiments, using the scan & capture technique.

Now with the scan & capture technique, the computer is not influencing the animal in advance.

Initially, no stimulus is used to cause behavior, so it’s about waiting until the animal starts to offer the desired behavior.

In other words, there are no stimuli used BEFORE behavior, only AFTER.

This is how it works:

- The computer scans and waits for the behavior to happen.

- When the desired behavior occurs, it is captured through +R.

In other words, the scan & capture technique doesn’t use aids, cues, commands, triggers, tools, or whatever to cause the behavior to occur.

It’s a technique that only uses stimuli AFTER behavior occurs to reinforce or punish this behavior.

This means that the scan & capture technique in Skinner Boxes followed this “two-step” format:

- Behavior ==> Consequence (reinforcement)
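The two-step format can be sketched as a simple loop. This is only an illustration under my own assumptions. The function name `scan_and_capture`, the behavior labels, and the `"food"` consequence are hypothetical and are not part of the original experiments.

```python
def scan_and_capture(observed_behaviors, target):
    """Watch behaviors as they happen and reinforce only the target.

    Nothing is done BEFORE a behavior. A consequence is only
    delivered AFTER the desired behavior has occurred.
    """
    log = []
    for behavior in observed_behaviors:           # scan: just wait and watch
        if behavior == target:
            log.append((behavior, "food"))        # capture: reinforce with +R
        else:
            log.append((behavior, None))          # no consequence at all
    return log


session = ["walk", "preen", "peck disc", "walk", "peck disc"]
for behavior, consequence in scan_and_capture(session, "peck disc"):
    print(behavior, "==>", consequence)
```

The loop contains no cue or command. The only input is what the animal happens to offer.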

Shaping

In 1943, when Marian was 23 years old, the Brelands discovered the concept of “shaping”.

They proved that a pigeon could learn more complicated behaviors, such as making a full turn, through shaping.

This operant technique involved and required more manual and hand-operated human interaction.

Shaping behavior is about capturing what you get instead of what you want.

So instead of waiting all day for an animal to perform a behavior and then capturing it with a reward, the Brelands began rewarding the slightest try in the right direction with a hand-held food-delivery switch.

This way, a behavior could be gradually “shaped” into the complete desired behavior.

Check out this video to see the process in action:

The individual shaping of a response appeared to be a powerful non-forceful technique.

By capturing the slightest try, and then each slightly better try, the learning process accelerated.
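Shaping can be pictured as a criterion that moves up a little after every rewarded try. The sketch below assumes a pigeon learning a full turn, with each attempt measured in degrees. The function name `shape`, the `step` parameter, and the sample numbers are my own assumptions, not data from the Brelands.

```python
def shape(attempts, target, step):
    """Reward any attempt that meets the current criterion,
    then ask for slightly more next time."""
    criterion = step              # start by rewarding the slightest try
    rewarded = []
    for angle in attempts:
        if angle >= criterion:
            rewarded.append(angle)
            # Raise the bar a little, but never above the final goal.
            criterion = min(target, angle + step)
    return rewarded, criterion


attempts = [10, 45, 30, 90, 100, 180, 200, 360]  # degrees turned per try
rewarded, criterion = shape(attempts, target=360, step=45)
print(rewarded)    # [45, 90, 180, 360]
print(criterion)   # 360
```

Tries that fall short of the current criterion simply earn nothing, so the animal is never forced, only rewarded for doing slightly better than before.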

Secondary Reinforcers

At the start of the Skinner experiments, only primary reinforcers and primary punishers were used, the so-called “unconditioned” stimuli:

- By nature, a primary reinforcer is desirable to an animal, such as the addition of food, praise, a scratch or removal of pressure. Nothing needs to happen for the animal to understand that these are desirable consequences.

- By nature, a primary punisher is undesirable to an animal, such as the addition of electric current, pressure, a noise that frightens the animal, an aggressive tone of voice directed to the animal, or the removal of food. Animals naturally want to avoid these outcomes.

Since the animal does not need to be conditioned to like or dislike these stimuli, they are known as “primary” reinforcers and punishers.

But in 1945, while shaping the tricks, the Brelands discovered the concept of “secondary reinforcers”.

In the process of shaping, they noticed that the animals seemed to be paying attention to the noises made by the hand-held food-switches.

This is a typical form of classical conditioning, which is a more passive form of learning and associative learning.

The Brelands realized that the noise was an important part of the training process itself, and discovered that a sound could communicate to the animal the exact action being rewarded.

So the Brelands were the pioneers of the use of an acoustic secondary reinforcer, and the “bridging stimulus” was born.

Using a bridge has been popularized through clicker training in the 1990s, but already in 1945 the Brelands used it to improve timing and to teach animals at a distance:

- Timing: With the sound, they could pinpoint the precise action and bridge the gap between that moment and the primary reinforcer: the food reward. Using sounds such as the noise of a switch or a whistle was an early form of clicker training. Already in the 1940s, the Brelands found that the use of a bridge effectively sped up the learning process by increasing the amount of information going to an animal.

- At a distance: Using a bridging stimulus was also the first step to teach animals at a distance. While Skinner mostly trained rats and pigeons in the tiny little mechanical devices to do a single behavior, the Brelands were the first to teach all kinds of animals at a distance. By using acoustic secondary reinforcers such as clicks and whistles, they could teach chickens, hamsters, raccoons, monkeys, dogs, cats and other species more complex behaviors. As a result, the Brelands produced the first scientifically trained dolphin show ever in 1955.

Instinctive Drift

Already in the 1960s, the Brelands gained experience with the “failure” of reward-based operant conditioning, and they discovered that every species is susceptible to what they called “instinctive drift”.

The concept of instinctive drift originated when the Brelands taught raccoons to deposit coins into a bank slot.

The raccoons were initially successful at this activity but over time began to dip the coins in and out and rub them together rather than drop them in.

Now in nature, raccoons instinctively dip their food into water several times and rub it in order to wash it. Therefore, the adoption of this behavior with coins is instinctive drift.

The raccoons learned the “trick” but then began to drift away from the learned behavior towards more instinctive behavior.

Instinctive behavior isn’t learned. Instead, it’s a natural reaction which often is preferred by the animal over learned and unnatural actions.

So the instinctive drift went against Skinner’s primary rule: Reinforce a behavior and you get more of it.

The raccoons showed that that was just not true. The moment instinct takes over, the raccoon will break the “law” of positive reinforcement.

This also happened when the Brelands trained pigs, chickens and other animals. The scientific “absolute truth” fell apart when put to the test in the real world of Mother Nature.

The Brelands found out that when instinctive drift comes in, any animal of any species reverts to innate, unreinforced, unconscious, unplanned, and automatic behavior that interferes with operant conditioning and overrides trained behaviors.

This doesn’t mean we should abandon operant conditioning when training our horse, but we should be aware of species differences.

Scientific study of rats and pigeons in Skinner Boxes and science-based operant conditioning won’t give us ALL necessary information and concepts to train our horses.

More about this in the next article:

- Operant Conditioning & Mother Nature » (COMING SOON)